TREC Dynamic Domain Track 2015 Guidelines

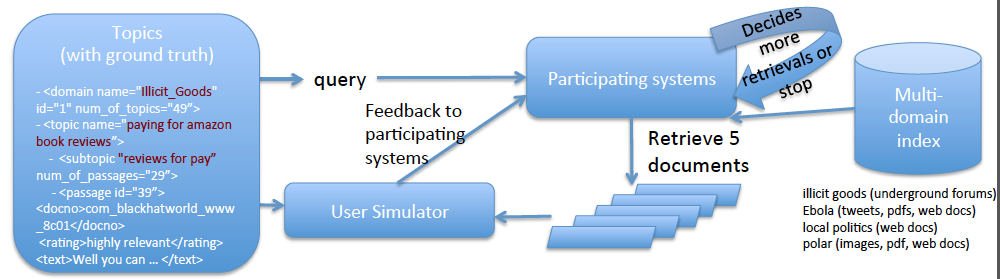

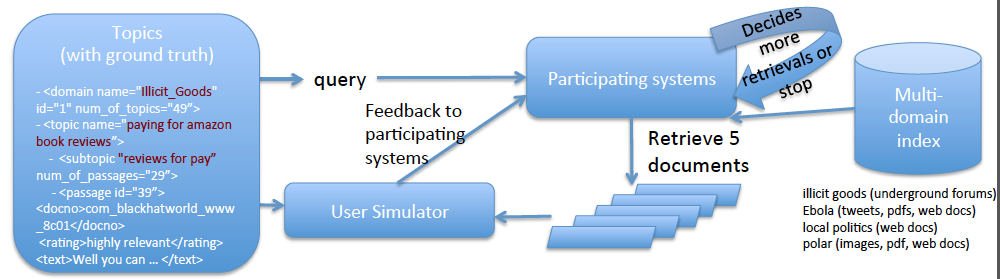

The goal of the dynamic domain (DD) track is to support research in dynamic, exploratory search of complex information domains. DD systems receive relevance feedback as they explore a space of subtopics within the collection in order to satisfy a user's information need.

- 1. Participation in TREC

- 2. Domains and datasets for 2015

- 3. Topics for 2015

- 4. Task Description

- 5. User Simulator (Jig) and Feedback Format

- 6. Task Measures

- 7. Run Format

- 8. Requirements

1. Participation in TREC

In order to take part in the DD track, you need to be a registered participant of TREC. The TREC Call for Participation, at http://trec.nist.gov/pubs/call2015.html, includes instructions on how to register. You must register before May 1, 2015 in order to participate.

The datasets and relevance judgments will be made generally available to non-participants after the TREC 2015 cycle, in February 2016. So register to participate if you want early access.

2. Domains and datasets for 2015

For 2015 there are four domains, each with different data:

Illicit Goods: this data is related to how illicit and counterfeit goods such as fake Viagra are made, advertised, and sold on the Internet. The dataset comprises posts from underground hacking forums, arranged into threads.

Ebola: this data is related to the Ebola outbreak in Africa in 2014-2015. The dataset comprises tweets relating to the outbreak, web pages from sites hosted in the affected countries and designed to provide information to citizens and aid workers on the ground, and text files from West African and other government sources.

Local Politics: this data is related to regional politics in the Pacific Northwest and the small-town politicians and personalities that work it. The dataset comprises web pages from the TREC 2014 KBA Stream Corpus.

Polar Sciences: this data comprises web pages, scientific data (HDF, NetCDF, and GRIB files), zip files, PDFs, images, and science code related to the polar sciences and publicly available from the NSF-funded Advanced Cooperative Arctic Data and Information System (ACADIS), the NASA-funded Antarctic Master Directory (AMD), and the National Snow and Ice Data Center (NSIDC) Arctic Data Explorer.

All the datasets are formatted using the Common Crawl Architecture schema from the DARPA MEMEX project, and stored as sequences of CBOR objects. See the datasets page for more details.

3. Topics

Within each domain, there will be 25-50 topics that represent user search needs. The topics are developed by the NIST assessors. A topic (which is like a query) contains a few words. It is the main search target for one complete run of dynamic search. Each topic contains multiple subtopics, each of which addresses one aspect of the topic. The NIST assessors have tried very hard to produce a complete set of subtopics for each topic, so we will treat them as the complete set and use them in the interactions and evaluation.

An example topic for the Illicit Goods domain can be found here. It is about "paying for amazon book reviews" and contains 2 subtopics.

You can find the topics (with ground truth) on the Tracks page in the TREC Active Participants area after you register to participate in TREC.

4. Task Description

Your systems will receive an initial query for each topic, where the query is two to four words and additionally indicates the domain by a number 1, 2, 3 or 4. In response to that query, systems may return up to five documents to the user. The simulated user will respond by indicating which of the retrieved documents are relevant to their interests in the topic, and to which subtopic each document is relevant. Additionally, the simulated user will identify passages from the relevant documents and assign the passages to the subtopics with a graded relevance rating. The system may then return another five documents for more feedback. Systems should stop when they believe they have covered all the user's subtopics sufficiently. The subtopics are not known to the system in advance; systems must discover the subtopics from the user's responses.
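The interaction described above can be sketched as a simple loop. This is a minimal illustration only: `simulated_feedback`, `TOY_TRUTH`, the document and subtopic identifiers, and the `patience` stopping rule are all hypothetical stand-ins, not the jig's actual interface. A real system would also re-rank its remaining candidates after each round of feedback rather than walk a fixed ranking.

```python
from typing import Dict, List, Set, Tuple

BATCH_SIZE = 5  # the guidelines allow up to five documents per iteration

# Hypothetical ground truth: docno -> [(subtopic_id, graded relevance)].
TOY_TRUTH: Dict[str, List[Tuple[str, int]]] = {
    "doc-a": [("T1.1", 3)],
    "doc-c": [("T1.2", 2), ("T1.1", 1)],
}


def simulated_feedback(docnos: List[str]) -> Dict[str, List[Tuple[str, int]]]:
    """Stand-in for the simulated user: feedback only for the documents shown."""
    return {docno: TOY_TRUTH.get(docno, []) for docno in docnos}


def dynamic_search(
    ranked_docnos: List[str], max_iterations: int = 10, patience: int = 1
) -> Tuple[List[str], Set[str]]:
    """Return documents in batches and discover subtopics from the feedback.

    Stops when `patience` consecutive batches reveal no new subtopic
    (a placeholder stopping rule), or when the candidates run out.
    """
    discovered: Set[str] = set()
    shown: List[str] = []
    stale_batches = 0
    for i in range(max_iterations):
        batch = ranked_docnos[i * BATCH_SIZE:(i + 1) * BATCH_SIZE]
        if not batch:
            break
        shown.extend(batch)
        feedback = simulated_feedback(batch)
        # Subtopics are unknown in advance; they are learned only from feedback.
        seen_now = {sub for rels in feedback.values() for sub, _grade in rels}
        new_subtopics = seen_now - discovered
        discovered |= new_subtopics
        stale_batches = 0 if new_subtopics else stale_batches + 1
        if stale_batches >= patience:
            break
    return shown, discovered
```

Deciding when to stop is part of the task, so the stopping rule is where a real system would differ most from this sketch.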

The following picture illustrates the task:

5. User Simulation Harness ("Jig") and Feedback Format

The system's interactions with the user will be simulated by a jig that the track coordinators will provide to you. The jig runs on Linux, Mac OS, and Windows. You will need the topics with ground truth to run the jig, and your system may interact with the ground truth only through the jig.

The jig package can be found at https://github.com/trec-dd/trec-dd-simulation-harness. That page includes instructions on how to set up and run the jig with an example oracle system, and how to write your system to connect with the jig.

6. Task Measures

The primary measures will be Cube Test and µ-ERR. Scoring scripts are included as part of the jig. We will also likely report other diagnostic measures such as basic precision and recall.

The Cube Test is a search effectiveness measure of the speed at which a system gains relevant information (documents or passages) in a dynamic search process. It measures the amount of relevant information a search system gathers over the entire search process, across multiple rounds of retrieval. A higher Cube Test score indicates a better DD system, one that ranks as much relevant information (documents and/or passages) for a complex search topic as possible, as early as possible.

Reference: Jiyun Luo, Christopher Wing, Hui Yang, Marti Hearst. The Water Filling Model and The Cube Test: Multi-Dimensional Evaluation for Professional Search. CIKM 2013.

µ-ERR extends the cascade model of ERR by defining an outer loop in which the user changes the entity profile each time they find a useful piece of new information.
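For orientation, the cascade model that µ-ERR builds on can be sketched as follows. This computes the standard Expected Reciprocal Rank for a single ranked list, not µ-ERR itself: the outer loop over profile changes is not shown, and the function name and the `max_grade` parameter are assumptions made for illustration. The scoring scripts shipped with the jig remain the authoritative implementation.

```python
from typing import Sequence


def expected_reciprocal_rank(grades: Sequence[int], max_grade: int) -> float:
    """Standard cascade-model ERR for one ranked list of graded judgments.

    grades[i] is the relevance grade of the document at rank i + 1.
    """
    err = 0.0
    p_reach = 1.0  # probability that the user reaches the current rank
    for rank, grade in enumerate(grades, start=1):
        # Probability that the document at this rank satisfies the user.
        p_satisfied = (2 ** grade - 1) / (2 ** max_grade)
        err += p_reach * p_satisfied / rank
        p_reach *= 1.0 - p_satisfied
    return err
```

In the cascade model the user scans down the list and stops at the first satisfying document, so highly relevant documents near the top contribute most to the score.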

7. Run Format

In TREC, a "run" is the output of a search system over all topics. In the DD track, the runs are the output of the harness jig. Participating groups typically submit more than one run corresponding to different parameter settings or algorithmic choices. The maximum number of runs allowed for DD 2015 is five from each team.

We use a line-oriented format similar to the classic TREC submission format:

topic_id docno ranking_score on_topic subtopic_rels

where 'on_topic' is 1 if the document is relevant to any subtopic and 0 otherwise, and subtopic_rels gives the document's graded relevance for each relevant subtopic, written as subtopic_id:grade entries separated by '|'. For instance:

DD15-1 1335424206-b5476b1b8bf25b179bcf92cfda23d975 503.000000 1 DD15-1.4:3
DD15-1 1321566720-7676cf342cb035e8e09b0696ae85d3a7 883.000000 1 DD15-1.1:2
DD15-1 1322120460-d6783cba6ad386f4444dcc2679637e0b 26.000000 1 DD15-1.4:2|DD15-1.4:2|DD15-1.4:2|DD15-1.2:2|DD15-1.2:2|DD15-1.1:3|DD15-1.4:2|DD15-1.4:2
DD15-1 1322088960-3f3465ecc86554b4b449539a46a5e7ba 709.000000 1 DD15-1.6:2|DD15-1.6:2|DD15-1.4:2|DD15-1.4:3
DD15-1 1321860780-f9c69177db43b0f810ce03c822576c5c 470.000000 1 DD15-1.1:3
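A run line in this format could be parsed along the following lines. This is a minimal sketch: the `RunLine` class and `parse_run_line` function are hypothetical names, and the code assumes that fields are separated by whitespace and that a line for an off-topic document may omit the subtopic_rels field, neither of which the guidelines state explicitly.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RunLine:
    topic_id: str
    docno: str
    ranking_score: float
    on_topic: bool
    subtopic_rels: List[Tuple[str, int]]  # (subtopic_id, graded relevance)


def parse_run_line(line: str) -> RunLine:
    """Parse one line: topic_id docno ranking_score on_topic subtopic_rels."""
    fields = line.split()
    if len(fields) < 4:
        raise ValueError(f"expected at least 4 fields, got {len(fields)}")
    topic_id, docno, score, on_topic = fields[:4]
    rels: List[Tuple[str, int]] = []
    if len(fields) > 4:  # assumption: the field may be absent when on_topic is 0
        for item in fields[4].split("|"):
            # Split on the last ':' so the subtopic id is kept whole.
            subtopic_id, _, grade = item.rpartition(":")
            rels.append((subtopic_id, int(grade)))
    return RunLine(topic_id, docno, float(score), on_topic == "1", rels)
```

Repeated entries for the same subtopic, as in the third example line, are kept in order rather than merged, since the guidelines do not say how they should be combined.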

8. Requirements

Participants are expected to submit at least one run by the deadline.

Runs may be fully automatic, or manual. Manual indicates intervention by a person at any stage of the retrieval. We welcome unusual approaches to the task including human-in-the-loop searching, as this helps us set upper performance bounds.
